Nearly every computer has a Graphics Processing Unit (GPU), either on a separate graphics card or built into the main processor, and the GPU has evolved far beyond its original purpose of drawing images on a screen. Today it is a powerhouse driving revolutions in artificial intelligence, scientific discovery, and immersive experiences. But what exactly is a GPU, and how does it differ from the Central Processing Unit (CPU), the traditional brain of a computer?

The Core Difference: Specialized Parallelism

The key lies in their architectural design philosophy:

CPU (Central Processing Unit)

Designed as a “jack-of-all-trades.” A typical modern CPU has a relatively small number of powerful, complex cores (e.g., 4, 8, 16, or 32). These cores excel at handling diverse tasks quickly: running the operating system and applications, executing branching logic, and making complex decisions where each step often depends on the result of the previous one (sequential processing). CPUs prioritize low latency, meaning they aim to finish any single task as quickly as possible (Intel, 2023; AMD, 2023).
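
The sequential, dependent processing described above can be made concrete with a small Python sketch of a loop-carried dependency. The function name `running_balance` and the numbers are illustrative assumptions only; the point is that iteration n needs the output of iteration n-1, so adding more cores would not make this particular loop finish any sooner.

```python
def running_balance(start: float, rate: float, deposits: list[float]) -> list[float]:
    """Hypothetical example: each period's balance is computed from the
    previous period's balance, so step n cannot begin until step n-1 ends."""
    balances = []
    balance = start
    for deposit in deposits:
        # Loop-carried dependency: the new value is derived from the old one.
        balance = balance * (1 + rate) + deposit
        balances.append(balance)
    return balances


if __name__ == "__main__":
    print(running_balance(100.0, 0.5, [10.0, 10.0, 10.0]))  # [160.0, 250.0, 385.0]
```

A fast, low-latency core is the right tool here: the only way to speed up this chain is to make each individual step finish sooner.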

GPU (Graphics Processing Unit)

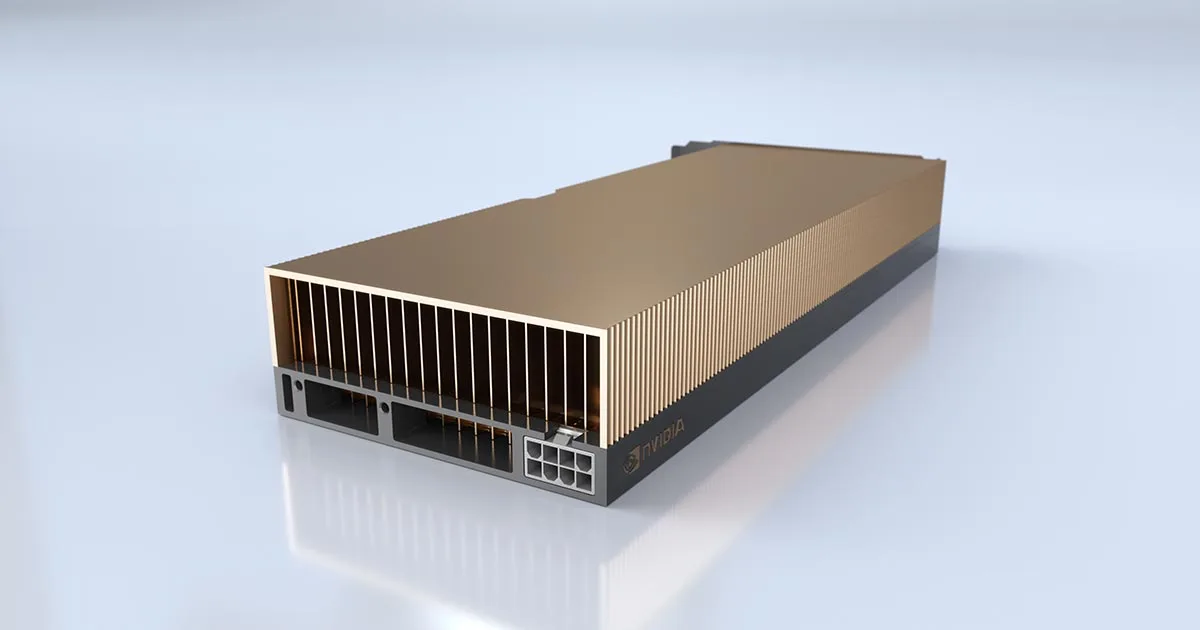

Designed as a “master of many similar tasks.” A GPU contains thousands of smaller, simpler, and highly efficient cores optimized for a specific type of workload: massively parallel processing. Rendering complex graphics involves performing the same mathematical operations (like calculating lighting, color, and position) simultaneously on millions of pixels or vertices. A GPU’s architecture is built to tackle vast numbers of these simple, repetitive calculations concurrently (NVIDIA, 2023).
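
A minimal sketch of the data-parallel pattern described above, written in plain Python so it runs anywhere. It emulates a one-dimensional GPU kernel launch: each “thread” computes its global index the way CUDA kernels conventionally do (`blockIdx.x * blockDim.x + threadIdx.x`) and brightens exactly one grayscale pixel. The function names, the 0 to 255 grayscale format, the gain value, and the tiny block size of 4 are assumptions for illustration; real launches typically use blocks of hundreds of threads, and here the loops run one after another rather than in parallel.

```python
import math


def brighten_kernel(block_idx: int, block_dim: int, thread_idx: int,
                    pixels: list[int], out: list[int], gain: float) -> None:
    """One 'thread' of work: it reads one input pixel and writes one output
    pixel, never touching any other thread's result."""
    # Global index, mirroring CUDA's blockIdx.x * blockDim.x + threadIdx.x.
    i = block_idx * block_dim + thread_idx
    # The last block may contain spare threads past the end of the data.
    if i < len(pixels):
        out[i] = min(255, round(pixels[i] * gain))


def launch(pixels: list[int], gain: float, block_dim: int = 4) -> list[int]:
    """Emulate a 1-D grid launch sequentially. On a real GPU these
    (block, thread) pairs would be spread across many cores at once."""
    out = [0] * len(pixels)
    grid_dim = math.ceil(len(pixels) / block_dim)  # enough blocks to cover every pixel
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            brighten_kernel(block_idx, block_dim, thread_idx, pixels, out, gain)
    return out


if __name__ == "__main__":
    print(launch([10, 100, 200, 250, 0], 1.5))  # [15, 150, 255, 255, 0]
```

Because no thread reads another thread's output, the order in which the (block, thread) pairs run does not matter, which is exactly the property that lets a GPU execute them concurrently.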

Anatomy of a GPU: Built for Throughput

This parallel focus shapes the GPU’s physical and logical structure:

- Thousands of Cores: Modern GPUs pack thousands of processing cores; the GeForce RTX 4090, built on NVIDIA’s Ada Lovelace architecture, has 16,384 CUDA Cores. Although each is individually less powerful than a CPU core, their sheer number provides immense throughput, letting the GPU process massive datasets simultaneously (NVIDIA, 2023).

- Wide Memory Interface: To keep all these cores supplied with data, GPUs connect to specialized high-speed Video RAM (VRAM), such as GDDR6 or HBM2e, over a very wide memory bus (e.g., 384-bit or 512-bit for GDDR-class memory, and 1024 bits per stack for HBM). This provides significantly higher memory bandwidth than typical CPU system RAM (JEDEC, 2023).

- Specialized Hardware: Beyond generic cores, modern GPUs include dedicated hardware units:

  - RT Cores (Ray Tracing): Accelerate ray tracing by performing bounding volume hierarchy (BVH) traversal and ray-triangle intersection tests in hardware, enabling realistic lighting, reflections, and shadows in real-time rendering (NVIDIA, 2023).

  - Tensor Cores: Designed specifically for the matrix multiply-accumulate operations that are fundamental to deep learning and AI workloads (NVIDIA, 2023). AMD’s RDNA 3 GPUs include comparable AI Accelerators (AMD, 2023), and its data-center GPUs use Matrix Cores.

- Software Ecosystem: Programming platforms such as CUDA (NVIDIA), ROCm (AMD), and the open OpenCL standard let programmers harness the GPU’s parallel power for non-graphics tasks, known as General-Purpose computing on GPUs (GPGPU) (Khronos Group, 2023; NVIDIA, 2023).
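
The core-count and memory-bandwidth points above lend themselves to back-of-the-envelope arithmetic. The sketch below uses two standard formulas: theoretical peak FP32 throughput as cores × clock × 2 (counting a fused multiply-add as two operations), and peak memory bandwidth as bus width in bytes × per-pin data rate. All input figures are hypothetical round numbers, not the specifications of any particular product; the CPU comparison assumes dual-channel DDR5-4800 (128 bits at 4.8 Gbps per pin).

```python
def peak_fp32_tflops(cores: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS, assuming every core
    completes one fused multiply-add (2 floating-point ops) per cycle."""
    gflops = cores * clock_ghz * 2
    return gflops / 1000


def bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: bytes moved per transfer across the
    whole bus multiplied by the per-pin data rate."""
    return bus_width_bits / 8 * gbps_per_pin


if __name__ == "__main__":
    # Hypothetical GPU: 10,000 cores at 2.0 GHz, 384-bit bus at 20 Gbps per pin.
    gpu_tflops = peak_fp32_tflops(10_000, 2.0)
    gpu_bw = bandwidth_gb_s(384, 20.0)
    # Assumed CPU: dual-channel DDR5-4800, i.e. 128 bits at 4.8 Gbps per pin.
    cpu_bw = bandwidth_gb_s(128, 4.8)
    # Peak compute divided by peak bandwidth: FLOPs a kernel must perform
    # per byte fetched before the cores, rather than memory, become the limit.
    flops_per_byte = gpu_tflops * 1000 / gpu_bw
    print(f"GPU peak compute: {gpu_tflops:.1f} TFLOPS")  # 40.0
    print(f"GPU bandwidth:    {gpu_bw:.1f} GB/s")        # 960.0
    print(f"CPU bandwidth:    {cpu_bw:.1f} GB/s")        # 76.8
    print(f"FLOPs per byte:   {flops_per_byte:.1f}")     # 41.7
```

Even with these made-up inputs, the hypothetical GPU would need to perform roughly 42 operations on every byte it fetches to keep its cores fully busy, which illustrates why a wide, fast memory interface matters so much.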

Beyond Pixels: The Rise of GPGPU

The realization that the GPU’s parallel architecture was incredibly efficient for certain non-graphics tasks sparked the GPGPU revolution. Any application that can be broken down into many smaller, independent tasks can potentially run much faster on a GPU:

- Artificial Intelligence & Deep Learning: Training complex neural networks involves performing enormous numbers of matrix multiplications across massive datasets, a workload well suited to the parallel architecture and Tensor Cores of modern GPUs. This is the engine behind breakthroughs in image recognition, natural language processing, and more (IEEE Spectrum, 2021).

- High-Performance Computing (HPC): Scientific simulations (climate modeling, molecular dynamics, fluid dynamics) involve solving complex equations across vast grids or particle systems, inherently parallel problems where GPUs offer massive speedups over CPUs alone (Top500.org, 2023).

- Data Science & Analytics: Processing and analyzing massive datasets for trends, patterns, and insights benefits greatly from GPU acceleration in tasks like data filtering, transformation, and complex statistical modeling (TechTarget, 2022).

- Content Creation: Video editing (especially effects rendering and encoding), 3D animation rendering, and complex image processing leverage GPU power for significantly faster workflows (Adobe, 2023; Blender Foundation, 2023).

- Advanced Graphics: Of course, rendering increasingly realistic and complex scenes in games, virtual reality (VR), and augmented reality (AR) remains a core function, continuously pushing GPU technology forward.
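
Matrix multiplication, the heart of the deep-learning workloads listed above, shows why such tasks parallelize so well. In this naive Python sketch (not how GPU math libraries actually implement it), every output element `C[i][j]` is an independent dot product, so a GPU could assign each one to its own thread. The helper `multiply_adds` is a hypothetical name for the simple n × k × m operation count.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Naive (n x k) @ (k x m) matrix product. Each output element C[i][j]
    is an independent dot product of row i of A with column j of B, so
    no element has to wait for any other."""
    n, k = len(a), len(b)
    if any(len(row) != k for row in a):
        raise ValueError("columns of A must equal rows of B")
    m = len(b[0])
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]


def multiply_adds(n: int, k: int, m: int) -> int:
    """Multiply-add count for an (n x k) @ (k x m) product: one per term
    of each of the n * m dot products."""
    return n * k * m


if __name__ == "__main__":
    print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
    print(multiply_adds(4096, 4096, 4096))             # 68719476736
```

A single product of two 4096 × 4096 matrices already needs about 68.7 billion multiply-adds, and training repeats products like this across many layers and many batches of data.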

Conclusion: The Parallel Workhorse

A GPU is no longer just a graphics chip. It is a highly specialized parallel processor engineered for raw computational throughput on tasks that can be executed simultaneously. While the CPU remains essential for general system management and complex sequential logic, the GPU has become indispensable for accelerating the massively parallel workloads at the frontiers of modern computing: AI, scientific discovery, large-scale data analysis, and ever more immersive visual experiences. Its evolution from a graphics renderer into a general-purpose computational powerhouse underscores its critical role in today’s technology.

Citations

- Adobe. (2023). GPU Acceleration in Adobe Applications. https://helpx.adobe.com/premiere-pro/system-requirements.html (Illustrates GPU use in professional software)

- AMD. (2023). AMD RDNA 3 Architecture. https://www.amd.com/en/graphics/amd-radeon-rx-7000-series-graphics (Details modern GPU architecture, including parallel cores and AI accelerators)

- Blender Foundation. (2023). Cycles Render Engine. https://www.cycles-renderer.org/ (Demonstrates GPU use in rendering)

- IEEE Spectrum. (2021). Why GPUs Are Great for AI. https://spectrum.ieee.org/gpu-computing (Explains the suitability of GPUs for AI)

- Intel. (2023). CPU Architecture. https://www.intel.com/content/www/us/en/products/details/processors.html (Contrasts CPU design philosophy)

- JEDEC. (2023). GDDR6 Standard. https://www.jedec.org/standards-documents/docs/jesd250c (Specifies high-bandwidth GPU memory standards)

- Khronos Group. (2023). OpenCL Overview. https://www.khronos.org/opencl/ (Standard for cross-platform GPGPU programming)

- NVIDIA. (2023). NVIDIA CUDA Programming Guide. https://docs.nvidia.com/cuda/ (Core reference for GPGPU programming on NVIDIA hardware)

- NVIDIA. (2023). NVIDIA Ada Lovelace Architecture. https://www.nvidia.com/en-us/geforce/graphics-cards/40-series/ (Details modern GPU architecture, cores, RT, Tensor)

- TechTarget. (2022). GPU Accelerated Analytics. https://www.techtarget.com/searchdatamanagement/definition/GPU-accelerated-analytics (Describes application in data analytics)

- Top500.org. (2023). TOP500 List, November 2023. https://www.top500.org/lists/top500/2023/11/ (Shows prevalence of GPU acceleration in world’s fastest supercomputers)